BotTalk Introduces Text to Speech Voices by OpenAI into the Audio Hub

Table of contents

1. BotTalk introduces OpenAI TTS

2. Evolution of TTS Provider in BotTalk

BotTalk introduces OpenAI TTS

Since BotTalk Audio Hub was established in 2019, our unwavering mission has been to furnish news publishers with premier AI voice generation technology, bringing written content to life through realistic AI voices. Recognizing the pivotal role of high-fidelity audio in captivating diverse audiences, we've consistently strived to bridge the gap between content and its auditory reception across various languages and accents.

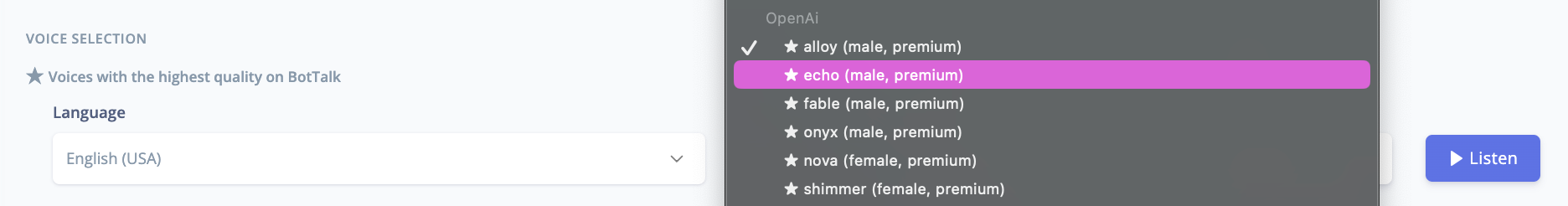

The introduction of OpenAI's lifelike Text-to-Speech (TTS) voices marked a significant milestone in our journey. With an eye for pioneering solutions, we promptly embraced OpenAI's advanced voice technology, integrating it into BotTalk Audio Hub. This integration empowered our users with unparalleled control over their audio content's creation, simplifying the transformation of text into natural, engaging speech.

This collaboration highlighted our dedication to accessibility and innovation, offering a seamless transition for our users without the need for additional IT resources or complex technical adjustments. A mere click within our platform's settings was all it took to harness OpenAI's TTS capabilities, instantly elevating written content with voices characterized by humanlike intonation and clarity.

OpenAI's generative AI, notably the voice feature announced as ChatGPT gained spoken conversation, brings a new level of interactivity to AI-assisted communication. OpenAI's collaboration with voice actors to create diverse voices and its partnership with Spotify for voice-translated podcasts exemplify the technology's vast potential for creative and inclusive applications.

As BotTalk Audio Hub integrates these advancements, we not only enhance our platform's capabilities but also commit to exploring further innovations in AI voice technology. Our collaboration with OpenAI is just the beginning of a thrilling path toward making AI-generated audio content more dynamic, accessible, and versatile for publishers and their audiences worldwide. We eagerly anticipate the future developments in this domain, ensuring that BotTalk remains at the cutting edge of AI voice generation, facilitating engaging and diverse auditory experiences for all.

And yes, this blog post has been audified using OpenAI Voice. Take a listen, it's amazing, we promise!

Evolution of TTS Provider in BotTalk

But let's step back and see how the quality of Text-to-Speech evolved over the years.

Reflecting on the evolution of Text-to-Speech (TTS) providers within BotTalk, it's clear that our journey has been nothing short of remarkable. We embarked on this path with the integration of Amazon Polly Voices, a pioneering force in modern TTS technology.

Responding to our users' needs, when Google unveiled its neural TTS voices in the Wavenet product, we added them as a TTS provider to the BotTalk Audio Hub. Then, about eight months later, Microsoft introduced Azure TTS Voices, prompting us to integrate them as well.

Each transition was seamless for our customers, requiring no additional IT resources and preserving the same user-friendly API and integrated player.

Amazon Polly

In the early stages of BotTalk's journey in the realm of publishing and audio content, our exploration of Text-to-Speech (TTS) technology led us to incorporate Amazon Polly Voices, known for their reputation as one of the best AI voice generators available.

Amazon emerged as a trailblazer in the TTS technology landscape, riding the wave of excitement generated by the launch of their smart assistant, Amazon Alexa. Motivated by the widespread acclaim for Alexa, we aimed to embed the same caliber of voice technology within BotTalk, offering our users unparalleled quality in voice synthesis.

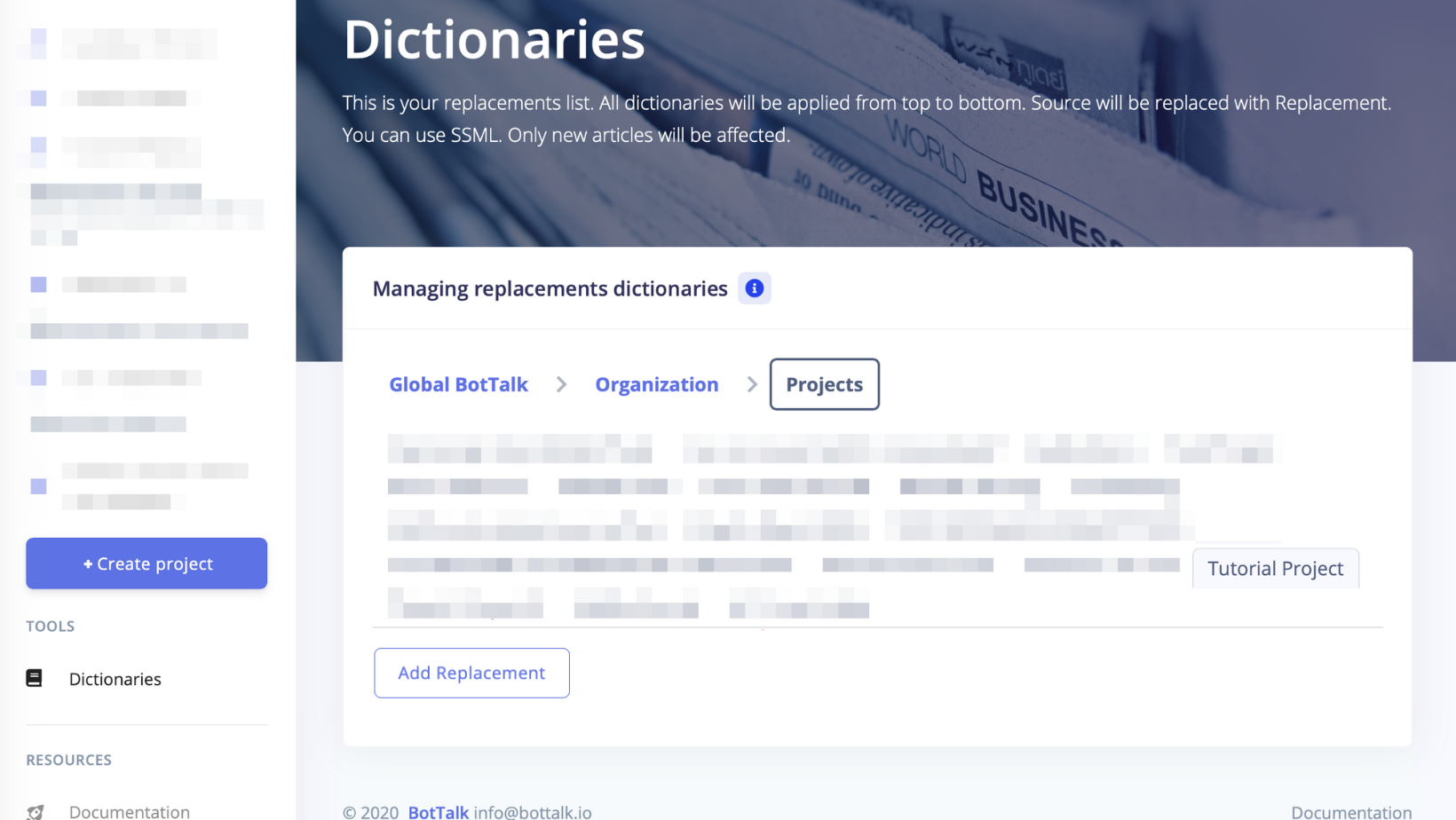

Shortly after integrating Amazon Polly, we observed firsthand the challenges faced when converting text to speech, particularly with the pronunciation of local German names and geographic locations. This insight led to the rapid development and implementation of a highly requested feature from our initial user feedback: Dictionaries. This feature empowered our users to customize pronunciations easily, providing a simple and intuitive user interface for enhancing speech accuracy.
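To illustrate the idea behind a pronunciation dictionary, here is a minimal sketch in Python. It is not BotTalk's implementation: the function name `apply_dictionary`, the respelling approach, and the sample entries are all assumptions made for illustration. The sketch simply swaps each listed word for a respelling before the text is sent to a TTS voice.

```python
import re

# Hypothetical dictionary: written form -> respelling the voice should read.
# The entries are illustrative only and are not taken from BotTalk.
PRONUNCIATIONS = {
    "Chemnitz": "Kemnitz",
    "Soest": "Sohst",
}


def apply_dictionary(text: str, dictionary: dict[str, str]) -> str:
    """Replace whole-word matches of each dictionary key with its respelling."""
    if not dictionary:
        return text
    # Longest keys first so overlapping entries resolve predictably.
    keys = sorted(dictionary, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(re.escape(k) for k in keys) + r")\b")
    return pattern.sub(lambda match: dictionary[match.group(1)], text)


print(apply_dictionary("Der Zug nach Soest hält in Chemnitz.", PRONUNCIATIONS))
```

Matching on word boundaries keeps longer words that merely contain an entry (for example "Soester") untouched, which matters when the entries are place names.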

The evolution of Amazon Polly since its announcement in 2016 has played a significant role in our continuous improvement. AWS introduced Polly as a managed service that revolutionized text-to-speech conversion, enabling the creation of speech-enabled applications and products without necessitating machine learning expertise. Just by calling an API, developers could leverage this service to enhance their applications. Over time, Polly expanded its voice options to include 29 languages and 59 different voices, demonstrating AWS's commitment to diversity and inclusivity in voice technologies.
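As a rough idea of what "just calling an API" looks like, the sketch below uses the AWS SDK for Python (boto3) and Polly's `synthesize_speech` operation. The helper names `build_polly_request` and `synthesize_to_file`, the default voice, and the output format are our own choices for illustration, and this is not how BotTalk itself calls Polly. Running the second function assumes boto3 is installed and AWS credentials and a region are configured.

```python
def build_polly_request(text: str, voice_id: str = "Joanna", engine: str = "neural") -> dict:
    """Assemble keyword arguments for Polly's SynthesizeSpeech operation."""
    return {
        "Text": text,
        "OutputFormat": "mp3",
        "VoiceId": voice_id,
        "Engine": engine,
    }


def synthesize_to_file(text: str, path: str) -> None:
    """Call Polly and write the returned MP3 bytes to `path`.

    Assumes boto3 is installed and AWS credentials/region are configured.
    """
    import boto3  # imported lazily so the request builder works without the SDK

    polly = boto3.client("polly")
    response = polly.synthesize_speech(**build_polly_request(text))
    with open(path, "wb") as audio_file:
        audio_file.write(response["AudioStream"].read())
```

A call such as `synthesize_to_file("Good morning.", "hello.mp3")` would then produce an audio file without any machine learning code on the caller's side.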

Moreover, AWS's introduction of Neural Text-To-Speech (NTTS) and the newscaster style feature marked a pivotal advancement in speech synthesis. NTTS, through a novel machine learning approach, significantly enhances the naturalness and expressiveness of synthesized speech, bringing it ever closer to human-like quality. At launch, this improvement was available for 11 voices, including all 3 UK English voices (Amy, Emma, and Brian) and all 8 US English voices (Ivy, Joanna, Kendra, Kimberly, Salli, Joey, Justin, and Matthew), both in real-time and batch mode. The NTTS technology also introduced the capability to apply various styles to synthesized speech, further enriching the auditory experience.

With these advancements, BotTalk has been able to offer our users the ability to create audio content that not only resonates with a broad spectrum of listeners but also enhances their publishing capabilities. By addressing the initial challenges and continuously integrating cutting-edge features like NTTS and dictionaries, BotTalk has significantly broadened its reach in the business and publishing world, cementing our commitment to innovation and user satisfaction in the digital content creation domain.

Let's listen to how Amazon Polly sounds today:

Google Wavenet

Our journey experienced a pivotal moment when, a few months following our initial integration of Amazon Polly voices, Google unveiled a transformative update to their voice models with the introduction of neural TTS voices in their Wavenet product. This advancement firmly positioned Google as a frontrunner in the development of realistic AI voices, showcasing their commitment to innovation in speech technology.

BotTalk, ever in pursuit of leveraging the finest AI voice generator technology, swiftly incorporated Wavenet into our Audio Hub. This addition significantly widened the selection of TTS providers available to our users, enhancing their ability to generate speech from text with greater diversity in voice options.

This strategic enhancement was particularly well-received by some of our German customers, who quickly recognized the superior quality of Wavenet's humanlike voices for engaging their varied audiences. Our decision to adopt Wavenet underscored our dedication to granting users comprehensive control over the speech synthesis process. We aimed to ensure that the generated audio not only sounded natural but also captured the intricate nuances of human speech, including the ability to insert pauses as desired.
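One common way to insert pauses with cloud TTS voices is the SSML `<break>` element. The post does not say how BotTalk exposes pauses, so the sketch below is only an assumption about what such markup might look like. The helper name `with_pauses` and the default pause length are hypothetical.

```python
from xml.sax.saxutils import escape


def with_pauses(sentences: list[str], pause_ms: int = 500) -> str:
    """Join sentences into one SSML document with a fixed pause between them."""
    pause = f'<break time="{pause_ms}ms"/>'
    # Escape &, < and > so ordinary text cannot break the markup.
    body = pause.join(escape(sentence) for sentence in sentences)
    return f"<speak>{body}</speak>"


print(with_pauses(["Good morning.", "Here are today's headlines."]))
```

The resulting string can be passed to a TTS provider that accepts SSML input in place of plain text.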

Google's Wavenet, introduced by DeepMind in late 2016, represented a significant leap forward in TTS technology. It is a neural network that learns the intricacies of speech patterns and waveform shapes from a vast dataset of speech samples. During its training phase, Wavenet deciphers the structural elements of speech, understanding sequence and form to produce highly realistic speech waveforms from textual input. The updated version of Wavenet, now running on Google’s Cloud TPU infrastructure, boasts an impressive capability to generate raw waveforms 1,000 times faster than its predecessor, rendering one second of speech in merely 50 milliseconds. Not only has the speed improved, but the fidelity of these waveforms has also been enhanced, with a sampling rate of 24,000 samples per second and an increased resolution from 8 to 16 bits per sample. This results in higher quality audio that more closely mimics human speech.
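To put those figures in perspective, the short calculation below works out what they imply. It only uses the numbers quoted above (24,000 samples per second, 16 bits per sample, 50 milliseconds per second of speech). The function names are ours, and the data rate assumes uncompressed single-channel audio.

```python
def bytes_per_second(sample_rate_hz: int, bits_per_sample: int) -> int:
    """Raw data rate of uncompressed single-channel audio."""
    return sample_rate_hz * bits_per_sample // 8


def real_time_factor(generation_ms_per_audio_second: float) -> float:
    """How many seconds of speech are produced per second of compute."""
    return 1000 / generation_ms_per_audio_second


print(bytes_per_second(24_000, 16))  # raw bytes for each second of speech
print(real_time_factor(50))          # speed relative to real-time playback
print(2**8, 2**16)                   # amplitude levels at 8 and 16 bits
```

In other words, one second of speech at these settings is 48,000 bytes of raw audio, it is generated about 20 times faster than it plays back, and each sample can take one of 65,536 amplitude levels instead of 256.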

Through the inclusion of Google Wavenet, BotTalk has broadened the horizons for our users, providing them with access to a richer array of voice options across multiple languages and accents. This enables the creation of compelling audio content that effectively resonates with audiences, further amplifying our users' reach within the dynamic spheres of publishing and business.

Let's listen to how a Wavenet voice sounds today:

Microsoft Azure

Roughly eight months after embarking on our journey to enhance the publishing and AI-driven audio content landscape, Microsoft launched a pivotal innovation with their Azure TTS Voices. This introduction represented a significant milestone in our continuous search for the premier AI voice generator technology. BotTalk swiftly adopted Azure as a TTS provider, broadening our platform's spectrum of realistic AI voices and offering our users an expansive selection of voice options.

This addition had a particularly strong impact on the German publishing sector, with numerous publishers opting for Azure TTS Voices as their primary tool for converting text to speech. Microsoft's breakthrough in neural TTS has significantly advanced the quality of computer-generated voices, rendering them nearly indistinguishable from human recordings. With their neural TTS technology, Microsoft has achieved a natural prosody and clear articulation that notably diminishes listener fatigue during interactions with AI systems.

This neural-network-powered text-to-speech capability has set new standards in speech synthesis.

By integrating Azure TTS Voices, BotTalk has not only expanded the diversity of voices available to our users but also reaffirmed our dedication to enabling the creation of more natural, engaging audio content. This move further solidifies our leadership in providing cutting-edge solutions in the ever-evolving domains of publishing and business audio content creation.

Here is how Azure TTS sounds today:

The future of synthetic speech - Integration of Text to Speech Voices by OpenAI into BotTalk's Audio Hub

The integration of OpenAI's Text-to-Speech Voices into BotTalk's Audio Hub marks a significant milestone in the evolution of synthetic speech technology, providing our users with unparalleled access to the finest AI voice generation capabilities. Throughout our journey across TTS providers, what stood out was how effortless each transition was for our customers. Adopting OpenAI's voices is no different: it takes a single click, with no additional IT support or intricate configuration, highlighting the user-friendly nature of our platform.

OpenAI's recent advancements in voice technology have led to highly realistic synthetic voices that can be generated from only a brief sample of real speech. This innovation enables a range of creative and accessibility-enhancing applications, but it also raises concerns about potential misuse. Recognizing these challenges, OpenAI has limited the technology to specific use cases such as voice chat, working closely with voice actors and with collaborators such as Spotify.

Reflecting on the quality of OpenAI voices now, our enthusiasm is palpable. Back in 2019, we predicted that within a mere 3 to 5 years it would become increasingly difficult to distinguish human voices from synthetic ones. Today, we stand at the threshold of that prediction, with OpenAI's voices achieving a level of naturalism and human likeness that meets our users' diverse creative requirements. These voices can adeptly handle multiple languages and accents, capturing the subtle nuances of human speech, including the intentional placement of pauses.

This integration is a testament to the latest breakthroughs in synthetic voice research, offering publishers and businesses invaluable tools to engage their audiences with audio content that genuinely connects. As we embrace this era where the distinction between human and synthetic voices blurs, BotTalk remains at the forefront, committed to leveraging cutting-edge technology to empower our users to create captivating and authentic auditory experiences.